System as resources

Example of complexity: the highly variable network

What I’ve done with the systems model on these two pages is very simplistic.

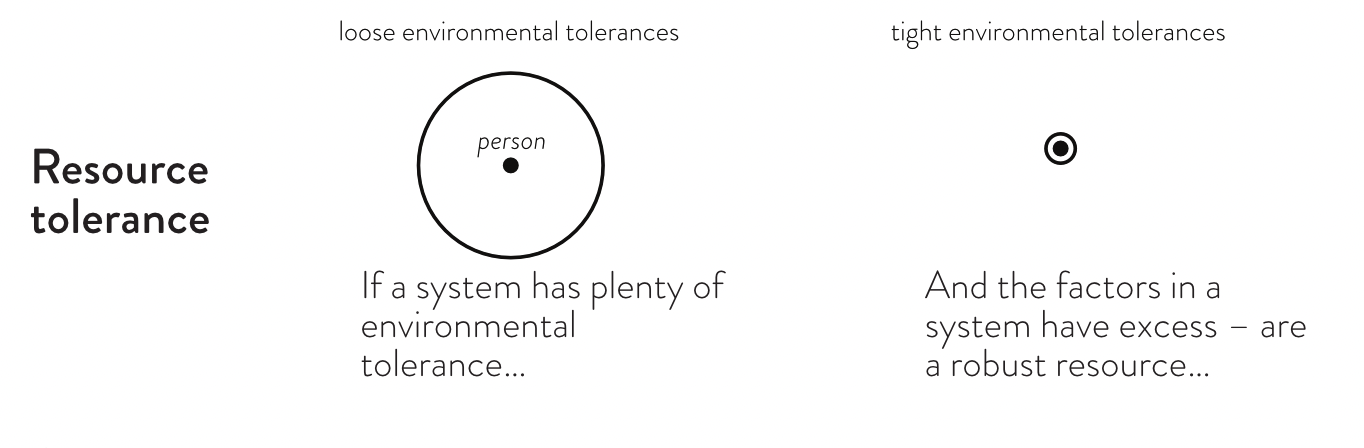

First, I represented resource tolerance. Lots of space, lots of tolerance. Little space, and it takes very little to make it oscillate and collapse.

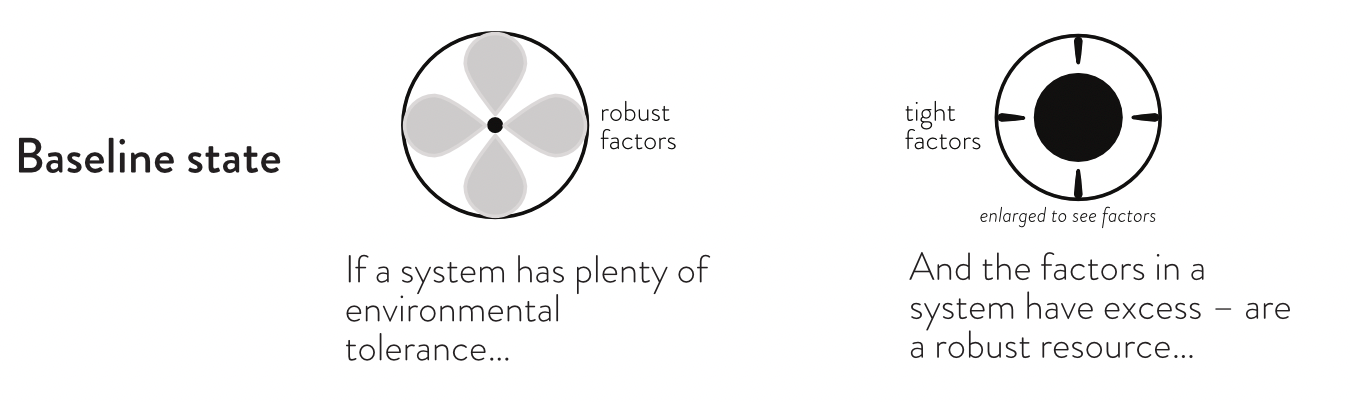

All the factors in the baseline state are equidistant around the circle, whether it’s resource-rich or resource-tight. The tolerance is still indicated.
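As a rough illustration of the baseline layout, equal spacing around a circle comes down to dividing the full turn by the number of factors. This is a minimal sketch, not the method used to draw the images; the function name `baseline_anchors` and the `radius` parameter are hypothetical.

```python
import math

def baseline_anchors(n_factors, radius=1.0):
    """Return (x, y) points spaced at equal angles around a circle.

    Assumption: each factor is anchored at one point on the circle.
    The radius is only a stand-in for how much room the system has.
    """
    if n_factors < 1:
        raise ValueError("need at least one factor")
    step = 2 * math.pi / n_factors  # equal angular gap between factors
    return [
        (radius * math.cos(i * step), radius * math.sin(i * step))
        for i in range(n_factors)
    ]

# Same number of factors, two different circle sizes.
roomy = baseline_anchors(5, radius=2.0)
tight = baseline_anchors(5, radius=0.5)
```

The spacing is identical in both cases; only the radius differs.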

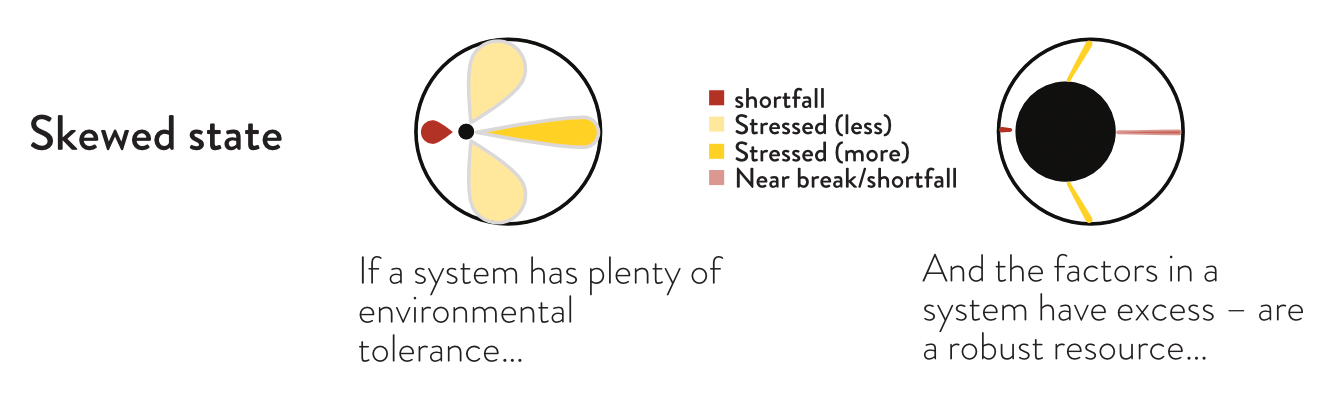

In the skewed state, one factor is in a rigid ‘shortfall’: it is scaled down in ratio and contracted, on the assumption that it can’t stretch. It mostly keeps its globular form, and the node moves over to meet it.

The rest of the factors are assumed to be flexible and are shown stretched to reach the outcome. The % change in length is calculated, and the same % is removed from the width. The assumption is that the system will continue functioning, under stress, based on the healthy fungibility of the remaining factors.
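The stretch rule for the flexible factors can be written out as simple arithmetic. This is a minimal sketch, assuming each factor is described only by a length and a width; the function name `stretch_factor` and the sample numbers are hypothetical, not taken from the drawings.

```python
def stretch_factor(length, width, new_length):
    """Stretch a flexible factor to a new length and thin it by the same %.

    Returns (pct_change, new_width), where pct_change is the fractional
    change in length (0.2 means 20% longer).
    """
    if length <= 0:
        raise ValueError("length must be positive")
    pct_change = (new_length - length) / length
    if pct_change >= 1:
        # Assumption: a factor cannot thin to zero or negative width.
        raise ValueError("stretch would remove the entire width")
    new_width = width * (1 - pct_change)  # same % removed from the width
    return pct_change, new_width

# Hypothetical factor: 10 units long, 4 wide, stretched to reach 12.
pct, thinner = stretch_factor(10, 4, 12)
print(f"{pct:.0%} longer, width now {thinner:.1f}")
```

A factor stretched 20% in length loses 20% of its width. The rule is a drawing convention for showing strain, so it does not try to conserve area.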

The connectome is disregarded, allowing the fungibility of the factors to be highlighted visually.

A real-world system can have more than one factor that is in a rigid or near-rigid shortfall. The factors may need an exaggerated ratio to fill the resource gap. Also, the missing factor may actually be recursive: needed earlier in the process to develop one of the factors that is trying to step in and mitigate the shortfall. That second-in-process factor might make up the shortfall for a while, but eventually it will strain, too.

Factors in a real-world system also move more independently across multiple measures; when and how they break can be harder to calculate because they rarely work in a vacuum.

There are a thousand and one other ways that a system can be infinitely more complicated.

This system visualization doesn’t include the connectome. This is one of those choices made in information architecture: show the whole, or show a part to help drive the idea home? Highlighting how efficient resources start out closer to breaking is more important, in my estimation, than showing the complexity of the system, here and now. The next section makes more of the connectome than of the factors.

environment, failing information states

Meadows, D.H. (2008). Thinking in Systems. Chelsea Green Publishing.

Taleb, N.N. (2007). The Black Swan: The Impact of the Highly Improbable. Random House.

Taleb, N.N. (2012). Antifragile: Things That Gain from Disorder. Random House.

Wucker, M. (2016). The Gray Rhino: How to Recognize and Act on the Obvious Dangers We Ignore. St. Martin's Press.

This one is tough for me to back into. There is no doubt that the influences have been deep, from many disciplines, and across decades. They include conversations in college, around Improv and macrosocietal shifts in history, anthropology, religion, politics, and more, often when we were bone-tired but our minds were going too fast to rest. They also include many of the investment managers and economists I rubbed elbows with in my early career. I was always reading more (because triangulation), but I was absolutely sparked by the insights and recommendations of people far deeper and broader in the available materials.

What I've provided above is intended as a stop-gap entry point for this and the following four pages. These are books that are at hand because I either have the physical book (I went digital, but kept what I held dearest) or it's more recent in my lists.